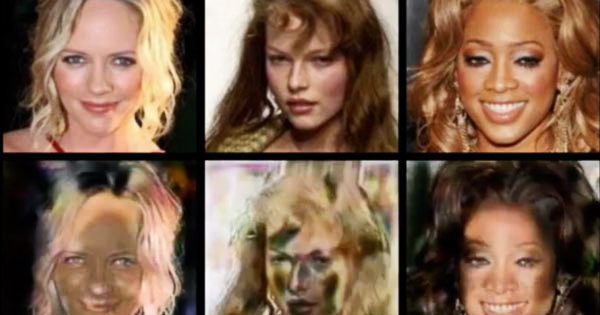

Scientists from Boston University have introduced a new algorithm for protection against deepfakes. Their filter modifies pixels so that videos and images become unsuitable for making fakes.

As deepfake technologies develop, it is becoming much harder to judge the reliability of information published on the Internet. These technologies use neural networks that can simulate a person's speech, movement and appearance, which makes images and videos easy to manipulate.

In this regard, a group of scientists from Boston University, consisting of Nataniel Ruiz, Sarah Adel Bargal and Stan Sclaroff, has developed an algorithm that protects images from being used as a basis for deepfakes.

“Given images of a person’s face, such systems can generate new images of that same person under different expressions and poses. Some systems can also modify targeted attributes such as hair color or age,” the scientists write.

The algorithm applies an invisible filter to an image or video. If someone then tries to modify these files with a neural network, the output either remains unchanged or becomes completely distorted or blurred, because the filter changes the pixels so that the video and images become unsuitable for making deepfakes.

“In order to prevent a malicious user from generating modified images of a person without their consent we tackle the new problem of generating adversarial attacks against such image translation systems, which disrupt the resulting output image. We call this problem disrupting deepfakes,” the scientists from Boston University write.
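To make the idea of an adversarial attack on an image translation system more concrete, here is a minimal sketch in Python with NumPy. It is not the researchers' code. The "network" is a hypothetical toy stand-in (`toy_generator`, a single `tanh` layer), and the function name `disrupt` and the parameters `eps`, `step`, `iters` and `seed` are assumptions made for illustration. The sketch uses a generic iterative sign-gradient search: it looks for a small change to the input, bounded per pixel by `eps`, that pushes the network's output as far as possible from the output on the clean image.

```python
import numpy as np


def toy_generator(x, W):
    """Hypothetical stand-in for an image translation network: G(x) = tanh(W x)."""
    return np.tanh(W @ x)


def disrupt(x, W, eps=0.05, step=0.01, iters=10, seed=0):
    """Search for a perturbation eta with max |eta_i| <= eps that moves
    G(x + eta) away from the clean output G(x), using iterative sign-gradient ascent."""
    clean = toy_generator(x, W)
    # Start from small random noise: at eta = 0 the gradient of the loss is zero.
    rng = np.random.default_rng(seed)
    eta = rng.uniform(-eps, eps, size=x.shape)
    for _ in range(iters):
        out = toy_generator(x + eta, W)
        # Analytic gradient of ||G(x + eta) - clean||^2 with respect to eta
        # for the toy tanh layer (a real network would use automatic differentiation).
        grad = 2.0 * W.T @ ((1.0 - out ** 2) * (out - clean))
        # Step in the direction of the gradient sign, then project back into the eps-box.
        eta = np.clip(eta + step * np.sign(grad), -eps, eps)
    # Keep pixel values in the valid [0, 1] range.
    return np.clip(x + eta, 0.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    image = rng.uniform(0.0, 1.0, size=16)   # a flattened toy "image"
    weights = rng.normal(size=(16, 16))      # toy network weights
    protected = disrupt(image, weights)
    print("max pixel change:", np.max(np.abs(protected - image)))
    print("output change:", np.linalg.norm(
        toy_generator(protected, weights) - toy_generator(image, weights)))
```

The per-pixel bound `eps` is what keeps the filter close to invisible in this sketch, while the change in the network's output is what the search tries to maximize.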

The work covers the StarGAN, GANimation, pix2pixHD and CycleGAN networks, and the attacks can be adapted to any image translation network.

According to the scientists, blurring can mount a successful defense against such disruption, so they also present an attack designed to evade blur defenses.

The developers of the project posted the source code of the technology on GitHub and also published a video demonstrating how it works.

Let me remind you of another interesting attack: the MyKingz botnet uses a photo of Taylor Swift to infect target machines. It is unlikely that any algorithm will protect against the use of celebrity images in criminal schemes.